Artificial Intelligence

What, how and why?

Data-driven learning has come a long way and now supports almost every area of science, as a means of developing and optimizing models that were not possible before.

I'm interested in developing experimental and computational tools that can be used in conjunction with data-driven machine learning methods to design, model, and build smart programmable structures. This requires a highly interdisciplinary approach combining computation, robotics, and machine learning, with the ultimate goal of uncovering the mechanics of solids and structures, as well as enabling applications in space logistics and mission design optimization.

Practical Experience

Virtually every research project I took part in involved some kind of computational or statistical analysis. Here, however, I want to highlight two experiences that made direct use of AI and Deep Learning techniques: my year as a teacher and data scientist at a coding education company, Let's Code Academy, and the use of machine learning regression models in my research with Dr. Antonio Prado.

Teacher at Let's Code Academy

My experience as a programming teacher allowed me not only to teach but also to learn extensively, from the basics of coding to the most advanced Machine Learning algorithms. Even though my strongest languages during my undergraduate studies were C, Fortran and Matlab, I taught Python professionally through a mix of formats: I started with Coding Tank, an intensive 24-hour leveling course, and then moved on to a more complete treatment of the language as the teacher of a 48-hour Python training delivered at 4 different companies, ranging from the basics of the language to an introduction to Data Science and Machine Learning.

Then, I had the opportunity to create from scratch and teach Python for Bloomberg, a MOOC specialized in financial data analysis, hosted on our own learning platform and built in partnership with Bloomberg, the company behind one of the most widely used financial data platforms on the market. I also taught Python for Finance twice; this course picks up where the Python course leaves off and covers libraries for Data Science, Machine Learning and Time Series Analysis.

Next, I went through extensive training as the teaching assistant for yet another, more advanced course, Data Science & Artificial Intelligence, which covers the core of Data Science and Machine Learning. I also joined the Data Squad, further developing my skills by structuring algorithms for database queries, data manipulation, data visualization and Machine Learning modeling, which allowed me to become the teacher of the DS & AI course only 2 months later.

Data Scientist at Let's Code Academy

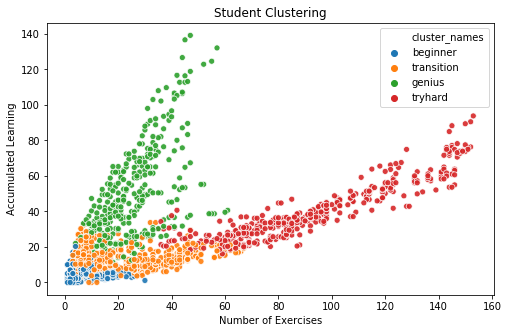

As a data scientist, I learned from a team of outstanding computer scientists and engineers. The main project I developed was an Artificial Intelligence for the company's Learning Management System, which recommended exercises to each student along their learning journey using Reinforcement Learning models. The first step was clustering students into four groups, which we named "genius", "tryhard", "transition" and "beginner". The clusters were built from variables such as the number of exercises a student completes, the difficulty of each exercise and the time the student spends in the Learning Management System. Then, we created another metric for the Reinforcement Learning algorithm: the accumulated learning, which combines the student's grade with the exercise's difficulty and serves as the reward in the model.

Student clustering for the Reinforcement Learning recommendation system project.

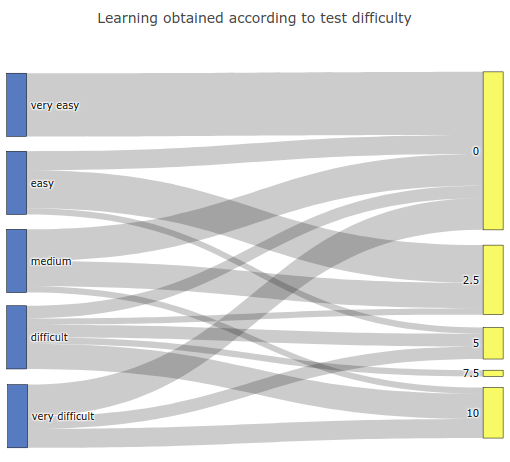
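The clustering step can be illustrated with k-means and k = 4. This is a minimal standard-library sketch, not the project's actual code: the use of k-means, the three features, the deterministic farthest-first initialization and the toy values are all assumptions. Naming the resulting groups ("genius", "tryhard", and so on) would be done afterwards by inspecting the centroids.

```python
from math import dist

# Hypothetical, already-scaled features per student:
# (exercises completed, mean exercise difficulty, time spent on the platform)
students = [
    (0.90, 0.90, 0.30), (0.95, 0.85, 0.25), (0.85, 0.95, 0.35),  # many hard exercises, little time
    (0.80, 0.50, 0.90), (0.85, 0.45, 0.95), (0.75, 0.55, 0.85),  # many medium exercises, lots of time
    (0.50, 0.40, 0.50), (0.45, 0.35, 0.55), (0.55, 0.45, 0.45),  # intermediate values
    (0.10, 0.10, 0.20), (0.15, 0.05, 0.15), (0.05, 0.15, 0.25),  # few, easy exercises, little time
]

def farthest_first_init(points, k):
    """Deterministic initialization: start at the first point, then repeatedly
    add the point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist(p, c) for c in centroids)))
    return centroids

def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    centroids = farthest_first_init(points, k)
    labels = []
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for each point
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster where it was
        if new_centroids == centroids:
            break  # centroids stopped moving
        centroids = new_centroids
    return labels, centroids

labels, centroids = kmeans(students, k=4)
```

In a real setting the features would first be standardized, since k-means relies on Euclidean distances and is sensitive to feature scales.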

The data from each student cluster was then fed to a Multi-Armed Bandit agent (i.e., the Artificial Intelligence) responsible for evaluating which exercise difficulty best fits the student's profile. The figure below shows how a test's difficulty (from very easy to very hard) can affect a student's learning outcome (on a 0-10 scale in 2.5-point increments). Thicker lines indicate more expressive outcomes:

Schematic of the accumulated learning obtained according to test difficulty for the "genius" student cluster.

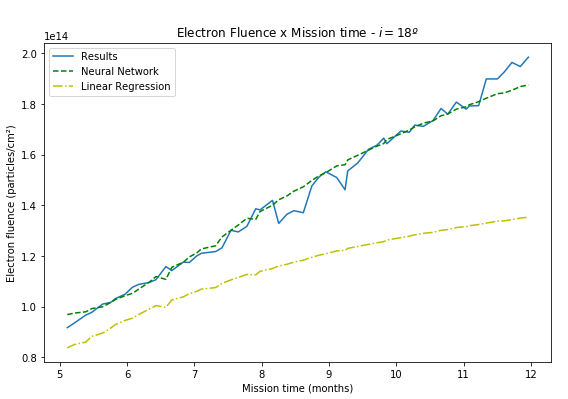
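A Multi-Armed Bandit agent can be sketched with an epsilon-greedy policy in which each arm is one of the five difficulty levels. This is an illustrative sketch, not the production agent: the epsilon-greedy strategy, the hypothetical `simulate_reward` environment and its mean rewards (standing in for the accumulated learning of one cluster) are assumptions.

```python
import random

DIFFICULTIES = ["very easy", "easy", "medium", "hard", "very hard"]

# Hypothetical mean reward ("accumulated learning") per difficulty for one cluster
TRUE_MEANS = [2.0, 4.0, 7.0, 5.0, 3.0]

def simulate_reward(arm, rng):
    """Hypothetical environment: noisy reward around the arm's true mean."""
    return rng.gauss(TRUE_MEANS[arm], 1.0)

def epsilon_greedy(n_rounds=5000, epsilon=0.1, seed=42):
    rng = random.Random(seed)
    n_arms = len(DIFFICULTIES)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random difficulty
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit the best estimate
        reward = simulate_reward(arm, rng)
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = epsilon_greedy()
best = max(range(len(DIFFICULTIES)), key=lambda a: estimates[a])
print(DIFFICULTIES[best], [round(e, 2) for e in estimates])
```

Epsilon-greedy is the simplest bandit policy; alternatives such as UCB or Thompson sampling trade off exploration more adaptively.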

Neural network regression on Astrodynamics

My research on Radiation Exposure Optimization in Low Thrust Transfers culminated in predicting the radiation absorbed by a spacecraft during a transfer to the Moon, given a set of initial parameters. In other words: a regression. I started with a simple Linear Regression model, then moved to a Support Vector Machine and, finally, to a Neural Network. I optimized the hyperparameters through a Grid Search, building a Pipeline that included feature scaling. Once the model's predictions were plotted against the simulation results, it was clear that it fit the data much better than the linear regression.

Regression model comparison for the number of electrons a spacecraft absorbs given a set of parameters (propulsion system, initial eccentricity, height, etc.).
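The "feature scaling inside a pipeline, then grid search" workflow can be sketched without external libraries. This is a stand-in, not the original model: it swaps in a k-nearest-neighbors regressor (instead of the SVM and Neural Network) to stay dependency-free, uses a single validation split instead of cross-validation, and the data, feature names and target are synthetic. In scikit-learn the same idea is usually expressed with `Pipeline` and `GridSearchCV`.

```python
import random
from math import dist
from statistics import mean, pstdev

def fit_scaler(X):
    """Learn per-feature mean and standard deviation (z-score scaling)."""
    cols = list(zip(*X))
    return [mean(c) for c in cols], [pstdev(c) or 1.0 for c in cols]

def transform(X, scaler):
    mu, sigma = scaler
    return [[(x - m) / s for x, m, s in zip(row, mu, sigma)] for row in X]

def knn_predict(X_train, y_train, x, k):
    """Average the targets of the k nearest training points."""
    nearest = sorted(range(len(X_train)), key=lambda i: dist(X_train[i], x))[:k]
    return mean(y_train[i] for i in nearest)

def mse(y_true, y_pred):
    return mean((a - b) ** 2 for a, b in zip(y_true, y_pred))

def grid_search(X_train, y_train, X_val, y_val, k_grid):
    """Pipeline: scale with training statistics only, then score each k on validation data."""
    scaler = fit_scaler(X_train)
    Xt, Xv = transform(X_train, scaler), transform(X_val, scaler)
    scores = {k: mse(y_val, [knn_predict(Xt, y_train, x, k) for x in Xv]) for k in k_grid}
    return min(scores, key=scores.get), scores

rng = random.Random(0)

def make_data(n):
    """Synthetic data: two features on very different scales (hypothetical)."""
    X, y = [], []
    for _ in range(n):
        ecc, height = rng.uniform(0, 0.5), rng.uniform(200, 2000)
        X.append([ecc, height])
        y.append(10 * ecc + height / 1000 + rng.gauss(0, 0.05))
    return X, y

X_train, y_train = make_data(80)
X_val, y_val = make_data(20)
best_k, scores = grid_search(X_train, y_train, X_val, y_val, k_grid=[1, 3, 5, 9, 15])
```

Fitting the scaler on the training split only is the point of the pipeline: it keeps validation data from leaking into the preprocessing.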

Deep Learning Specialization

Through the 5 courses of the Deep Learning Specialization, I built on my Machine Learning experience and moved into a more advanced realm: Deep Neural Networks. The nearly 84 hours of coursework greatly deepened my technical expertise in the area and were filled with practical assignments in which I implemented deep Neural Network algorithms from scratch, along with very interesting applications. I have selected a few of the guided projects from the specialization below to show what I learned.

The architecture behind a deep Neural Network for cat detection.

A Convolutional Neural Network for autonomous driving that detects 80 classes of objects (cars, people, etc.).

A regularized Neural Network that recommends positions where the goalkeeper should kick the ball so that teammates can advance with it.

An art generation Convolutional Neural Network that merges a painting's art style with any picture.

The architecture behind a Recurrent Neural Network that translates human readable dates into machine readable dates.

Academic Background

STAT 598 - Statistical Machine Learning

ACMS 30440 - Probability and Statistics

PHYS 60203 - Statistical Analysis Techniques for Modern Astronomy

MAC0115 - Introduction to Computing for Exact Sciences and Technology

AGA0503 - Numerical Methods in Astronomy

Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization

Prof. Qi Guo - Purdue University (60 hours)

This is a graduate-level machine learning course at Purdue ECE. Unlike the many machine learning classes offered online, this one focuses on the mathematics behind some traditional and fundamental topics in machine learning. The goal of this class is to help students gain a deeper understanding of the mathematical intuition behind, and the connections between, a variety of machine learning methods, rather than programming per se. The four clusters of topics covered in this course are listed below.

Mathematical Preliminaries. Matrices, vectors, Lp norm, geometry of the norms, symmetry, positive definiteness, eigendecomposition. Unconstrained optimization, gradient descent, convex functions, Lagrange multipliers, linear least squares. Probability space, random variables, joint distributions, multi-dimensional Gaussians.

Linear/nonlinear Classifiers. Linear discriminant analysis, separating hyperplane, multi-class classification, Bayesian decision rule, geometry of Bayesian decision rule, linear regression, logistic regression, perceptron algorithms, support vector machines, nonlinear transformations, intro to neural networks.

Learning Theory. Bias and variance, training and testing, generalization, PAC framework, Hoeffding inequality, VC dimension.

Robustness. Adversarial attack, targeted and untargeted attack, minimum distance attack, maximum loss attack, regularization-based attack. Perturbation through noise. Robustness of SVM.
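Two of the topics above connect naturally: training a linear classifier with the perceptron algorithm and then attacking it with a minimum distance attack, which has a closed form for linear models. A minimal sketch on toy data; the function names and the small overshoot factor are illustrative assumptions, not course material.

```python
def linear_score(w, b, x):
    """f(x) = w . x + b; the predicted class is the sign of f(x)."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def predict(w, b, x):
    return 1 if linear_score(w, b, x) > 0 else -1

def perceptron(X, y, max_epochs=100):
    """Rosenblatt perceptron for labels in {-1, +1}: update on every mistake."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * linear_score(w, b, xi) <= 0:  # wrong side of (or on) the hyperplane
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
                b += yi
                mistakes += 1
        if mistakes == 0:  # every training point is correctly separated
            break
    return w, b

def minimum_distance_attack(w, b, x, overshoot=1e-3):
    """Smallest L2 perturbation that flips a linear classifier's decision.

    The closest point on the hyperplane is x - f(x) / ||w||^2 * w; the small
    overshoot pushes x just past it.
    """
    scale = (1 + overshoot) * linear_score(w, b, x) / sum(wj * wj for wj in w)
    return [xj - scale * wj for xj, wj in zip(x, w)]

# Linearly separable toy data (logical AND): only (1, 1) belongs to class +1
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [-1, -1, -1, 1]
w, b = perceptron(X, y)

x = [1, 1]
x_adv = minimum_distance_attack(w, b, x)
```

The size of the perturbation is |f(x)| / ||w||, the distance from x to the decision boundary, which is why points near the boundary are the easiest to attack.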

Prof. Molly Walsh - University of Notre Dame (45 hours)

An introduction to the theory of probability and statistics, with applications to the computer sciences and engineering. Topics include discrete and continuous random variables, joint probability distributions, the central limit theorem, point and interval estimation and hypothesis testing.
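Point and interval estimation can be illustrated in a few lines: the sample mean as the point estimate and a large-sample confidence interval justified by the central limit theorem. A minimal sketch; the measurements are hypothetical and the normal quantile is an assumption that only holds well for reasonably large samples.

```python
from statistics import NormalDist, mean, stdev

def mean_with_confidence_interval(sample, confidence=0.95):
    """Point estimate (sample mean) plus a large-sample interval mean +/- z * s / sqrt(n).

    The normal quantile z relies on the central limit theorem; for small
    samples a Student t quantile would be the usual choice instead.
    """
    n = len(sample)
    x_bar = mean(sample)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(sample) / n ** 0.5
    return x_bar, (x_bar - half_width, x_bar + half_width)

# Hypothetical repeated measurements (n = 30)
sample = [4.8, 5.1, 5.3, 4.9, 5.0, 5.2, 4.7, 5.4, 5.0, 5.1] * 3
estimate, (low, high) = mean_with_confidence_interval(sample)
```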

Prof. Timothy Beers - University of Notre Dame (45 hours)

Binomial, Gaussian, Lorentzian and Poisson distributions; Bayesian inference; Error analysis and propagation; Testing for correlation, Partial correlation, Multivariable correlations; Principal component analysis; Parametric tests: mean, variances, t and F tests; Monte Carlo tests; Chi-square test for goodness of fit; Maximum-likelihood method; Least-Squares fit: straight line, polynomial, and arbitrary functions; Bootstrap and jackknife
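The last two resampling methods in the list can be compared on the standard error of a mean. A minimal sketch with hypothetical data; the number of bootstrap resamples and the seed are arbitrary choices. For the sample mean, the jackknife reproduces the textbook s / sqrt(n) exactly, while the bootstrap approximates it.

```python
import random
from statistics import mean, stdev

def jackknife_se(data, stat=mean):
    """Jackknife standard error: recompute the statistic leaving out one point at a time."""
    n = len(data)
    loo = [stat(data[:i] + data[i + 1:]) for i in range(n)]
    loo_mean = mean(loo)
    return ((n - 1) / n * sum((t - loo_mean) ** 2 for t in loo)) ** 0.5

def bootstrap_se(data, stat=mean, n_resamples=2000, seed=0):
    """Bootstrap standard error: spread of the statistic over resamples drawn with replacement."""
    rng = random.Random(seed)
    replicates = [stat(rng.choices(data, k=len(data))) for _ in range(n_resamples)]
    return stdev(replicates)

# Hypothetical measurements
data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 3.9, 4.4, 2.5, 3.1, 3.7]
analytic_se = stdev(data) / len(data) ** 0.5
jack, boot = jackknife_se(data), bootstrap_se(data)
```

Both functions accept any statistic through the `stat` argument, which is where resampling methods shine: the median or a correlation coefficient has no simple closed-form standard error.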

Prof. Leliane de Barros - University of São Paulo (60 hours)

Brief history of computing. Algorithms: characterization, notation, basic structures, pseudo-code instructions. Computers: basic units, instructions, addressing. Concepts of algorithmic languages: expressions; sequential, selective and repetitive commands; input/output; structured variables; functions. Program development and documentation. Examples of non-numerical processing. Extensive programming and program debugging practice.

Prof. Alex Carciofi - University of São Paulo (60 hours)

Introduction. Numerical calculation concepts: representation of real and complex numbers, matrices and vectors; precision, floating-point arithmetic; rounding errors. Matrices and linear systems: Gauss-Jordan elimination, iterative algorithms. Interpolation and extrapolation: polynomial interpolation, cubic and Laplace splines; multi-dimensional interpolation and extrapolation. Evaluation of functions: series and convergence; transcendental equations. Numerical differentiation: Chebyshev approximation. Numerical integration 1: trapezoidal rule; classic formulas. Numerical integration 2: Romberg integration; Gaussian quadrature; multi-dimensional integrals. Roots of functions: Newton's method of successive approximations and interval bisection. Minimization and maximization of functions: Brent's method. Random numbers: uniform, exponential and Gaussian distributions. Eigenvalues and eigenvectors. Search and selection algorithms. Fourier transforms: fast Fourier transform (FFT). Each topic is addressed with applications in Astronomy.
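The two root-finding methods in the syllabus make a good contrast: bisection halves a bracketing interval every step (linear convergence), while Newton's method uses the derivative and converges quadratically near a simple root. A minimal sketch, using x^2 - 2 = 0 as an arbitrary test problem.

```python
def bisection(f, a, b, tol=1e-12, max_iter=200):
    """Interval bisection: requires f(a) and f(b) to have opposite signs."""
    fa = f(a)
    if fa * f(b) > 0:
        raise ValueError("f(a) and f(b) must bracket a root")
    for _ in range(max_iter):
        m = (a + b) / 2
        fm = f(m)
        if fm == 0 or (b - a) / 2 < tol:
            return m
        if fa * fm < 0:
            b = m          # root lies in [a, m]
        else:
            a, fa = m, fm  # root lies in [m, b]
    return (a + b) / 2

def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton's method: x_{k+1} = x_k - f(x_k) / f'(x_k)."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            break
    return x

def f(x):
    return x * x - 2.0

def df(x):
    return 2.0 * x

root_bisect = bisection(f, 0.0, 2.0)
root_newton = newton(f, df, 1.0)
```

Bisection needs about 40 halvings to reach 1e-12 here, while Newton gets there in a handful of steps; in exchange, bisection cannot diverge once the root is bracketed.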

Prof. Andrew Ng - DeepLearning.AI (on Coursera) (~20 hours)

In this course, you will learn the foundations of deep learning. When you finish this class, you will:

Understand the major technology trends driving Deep Learning;

Be able to build, train and apply fully connected deep neural networks;

Know how to implement efficient (vectorized) neural networks;

Understand the key parameters in a neural network's architecture;

This course also teaches you how Deep Learning actually works, rather than presenting only a cursory or surface-level description. So after completing it, you will be able to apply deep learning to your own applications. If you are looking for a job in AI, after this course you will also be able to answer basic interview questions.

This is the first course of the Deep Learning Specialization.
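The forward pass of a fully connected deep network, the core of the cat-detection assignment, can be sketched in plain Python. This is an illustration under assumptions: the weights are hypothetical, and the course implements the same computation with vectorized NumPy matrix products, whereas this version loops over lists.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(W, b, a, activation):
    """One fully connected layer: activation(W a + b), with W stored as a list of rows."""
    return [activation(sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i) for row, b_i in zip(W, b)]

def forward(params, x):
    """Forward pass of an L-layer network: ReLU hidden layers, sigmoid output unit.

    params is a list of (W, b) pairs, one per layer; the sigmoid output can be read
    as the probability of the positive class (e.g. "cat").
    """
    a = x
    for W, b in params[:-1]:
        a = dense(W, b, a, relu)
    W_out, b_out = params[-1]
    return dense(W_out, b_out, a, sigmoid)

# Hypothetical weights for a 2 -> 2 -> 1 network
params = [
    ([[0.5, -0.2], [0.1, 0.3]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                   # output layer
]
probability = forward(params, [1.0, 2.0])[0]
```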

Prof. Andrew Ng - DeepLearning.AI (on Coursera) (~18 hours)

After 3 weeks, you will:

- Understand industry best-practices for building deep learning applications.

- Be able to effectively use the common neural network "tricks", including initialization, L2 and dropout regularization, batch normalization and gradient checking.

- Be able to implement and apply a variety of optimization algorithms, such as mini-batch gradient descent, Momentum, RMSprop and Adam, and check for their convergence.

- Understand new best-practices for the deep learning era of how to set up train/dev/test sets and analyze bias/variance

- Be able to implement a neural network in TensorFlow.

This is the second course of the Deep Learning Specialization.
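The optimizers listed above build on one another: Adam combines Momentum (a running mean of gradients) with RMSprop (a running mean of squared gradients) and corrects both for their zero initialization. A scalar sketch, assuming the commonly used defaults beta1 = 0.9, beta2 = 0.999 and eps = 1e-8 and a toy quadratic objective; the learning rate and number of steps are arbitrary.

```python
import math

def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Adam on a scalar parameter: momentum (m) plus RMSprop-style scaling (v), with bias correction."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g        # first moment (Momentum)
        v = beta2 * v + (1 - beta2) * g * g    # second moment (RMSprop)
        m_hat = m / (1 - beta1 ** t)           # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Minimize f(x) = (x - 3)^2, whose gradient is 2 (x - 3)
x_star = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
```

Setting beta1 = 0 recovers RMSprop with bias correction, and dropping the division by sqrt(v_hat) leaves gradient descent with Momentum.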

Prof. Andrew Ng - DeepLearning.AI (on Coursera) (~5 hours)

Much of this content has never been taught elsewhere, and is drawn from experience building and shipping many deep learning products. This course also has two "flight simulators" that let you practice decision-making as a machine learning project leader. This provides "industry experience" that you might otherwise get only after years of ML work experience.

After 2 weeks, you will:

- Understand how to diagnose errors in a machine learning system;

- Be able to prioritize the most promising directions for reducing error;

- Understand complex ML settings, such as mismatched training/test sets, and comparing to and/or surpassing human-level performance;

- Know how to apply end-to-end learning, transfer learning, and multi-task learning.

I've seen teams waste months or years through not understanding the principles taught in this course. I hope this two-week course will save you months of time.

This is the third course in the Deep Learning Specialization.
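The bias/variance diagnosis taught in this course compares three error rates, using human-level error as a proxy for Bayes error. A minimal sketch; the helper name, the tie-breaking rule and the example error rates are illustrative assumptions.

```python
def diagnose(human_error, train_error, dev_error):
    """Pick the larger gap, using human-level error as a proxy for Bayes error.

    avoidable bias = training error - human-level error
    variance       = dev error - training error
    """
    avoidable_bias = train_error - human_error
    variance = dev_error - train_error
    if avoidable_bias >= variance:
        # e.g. bigger network, train longer, better optimizer or architecture
        return "reduce bias", avoidable_bias, variance
    # e.g. more data, regularization, different architecture
    return "reduce variance", avoidable_bias, variance

print(diagnose(0.01, 0.08, 0.10))   # training error far above human level
print(diagnose(0.075, 0.08, 0.10))  # training error close to human level
```

The same training and dev errors lead to opposite priorities depending on the human-level baseline, which is why estimating that baseline matters.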

Prof. Andrew Ng - DeepLearning.AI (on Coursera) (~20 hours)

This course will teach you how to build convolutional neural networks and apply them to image data. Thanks to deep learning, computer vision is working far better than just two years ago, and this is enabling numerous exciting applications ranging from safe autonomous driving, to accurate face recognition, to automatic reading of radiology images.

You will:

- Understand how to build a convolutional neural network, including recent variations such as residual networks.

- Know how to apply convolutional networks to visual detection and recognition tasks.

- Know how to use neural style transfer to generate art.

- Be able to apply these algorithms to a variety of image, video, and other 2D or 3D data.

This is the fourth course of the Deep Learning Specialization.
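The basic operation of a convolutional layer can be shown on the classic vertical-edge example. A minimal sketch with stride 1 and no padding; as in deep learning frameworks, the "convolution" is really a cross-correlation (the kernel is not flipped), and the image values are a toy example.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D convolution as used in CNNs (cross-correlation, stride 1, no padding).

    Output size is (n_h - f_h + 1) x (n_w - f_w + 1).
    """
    n_h, n_w = len(image), len(image[0])
    f_h, f_w = len(kernel), len(kernel[0])
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(f_h) for v in range(f_w))
            for j in range(n_w - f_w + 1)
        ]
        for i in range(n_h - f_h + 1)
    ]

# A vertical-edge detector applied to a 6x6 image: bright left half, dark right half
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]
vertical_edge = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
edges = conv2d_valid(image, vertical_edge)
```

The 4x4 output is zero in flat regions and large where the brightness changes, which is exactly what a learned filter in the first layer of a CNN tends to pick up.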

Prof. Andrew Ng - DeepLearning.AI (on Coursera) (~16 hours)

This course will teach you how to build models for natural language, audio, and other sequence data. Thanks to deep learning, sequence algorithms are working far better than just two years ago, and this is enabling numerous exciting applications in speech recognition, music synthesis, chatbots, machine translation, natural language understanding, and many others.

You will:

- Understand how to build and train Recurrent Neural Networks (RNNs), and commonly-used variants such as GRUs and LSTMs.

- Be able to apply sequence models to natural language problems, including text synthesis.

- Be able to apply sequence models to audio applications, including speech recognition and music synthesis.

This is the fifth and final course of the Deep Learning Specialization.
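A vanilla RNN cell, the building block behind the date-translation project, reuses the same weights at every time step. A minimal sketch in plain Python; the W_ax / W_aa / b_a naming follows a common convention and, like the tiny one-unit weights in the usage example, is an assumption for illustration.

```python
import math

def rnn_cell(x, h_prev, W_ax, W_aa, b_a):
    """One step of a vanilla RNN: h_t = tanh(W_ax x_t + W_aa h_{t-1} + b_a)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(W_ax[k], x))
            + sum(w * hi for w, hi in zip(W_aa[k], h_prev))
            + b_a[k]
        )
        for k in range(len(b_a))
    ]

def rnn_forward(xs, h0, W_ax, W_aa, b_a):
    """Unroll the cell over a sequence, reusing the same weights at every time step."""
    h, states = h0, []
    for x in xs:
        h = rnn_cell(x, h, W_ax, W_aa, b_a)
        states.append(h)
    return states

# Hypothetical one-unit RNN fed a two-step sequence
states = rnn_forward([[1.0], [0.0]], h0=[0.0], W_ax=[[1.0]], W_aa=[[0.5]], b_a=[0.0])
```

GRUs and LSTMs replace this single tanh update with gated updates, which makes it easier to carry information across many time steps.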

Prof. Sharon Zhou - DeepLearning.AI (on Coursera) (~33 hours)

In this course, you will:

- Learn about GANs and their applications

- Understand the intuition behind the fundamental components of GANs

- Explore and implement multiple GAN architectures

- Build conditional GANs capable of generating examples from determined categories
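The two competing objectives of a GAN can be written as binary cross-entropy losses on the discriminator's outputs. A minimal sketch: the inputs are discriminator probabilities that are assumed to be given, and the non-saturating generator loss is one common formulation, not necessarily the exact one used in every assignment.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(x) toward 1 on real data and D(G(z)) toward 0 on fakes."""
    real = -sum(math.log(p) for p in d_real) / len(d_real)
    fake = -sum(math.log(1 - p) for p in d_fake) / len(d_fake)
    return real + fake

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A maximally confused discriminator outputs 0.5 everywhere
confused = discriminator_loss([0.5], [0.5])
confident = discriminator_loss([0.9], [0.1])
```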

Prof. Rodrigo Schmitt - Let's Code Academy (48 hours)

1) Input and output: Reading user input and printing data.

2) Variables: Declaration and initialization of variables and data types: int, float, boolean, etc.

3) Conditional Structures: If, else and elif logical conditionals for building algorithms.

4) Loops: Repetition of blocks of code with for and while loops.

5) Data Structures: Manipulation of collections of data in the form of lists, tuples and dictionaries.

6) JSON: Representation of data as JavaScript Object Notation.

7) Files and Data Persistence: Reading and writing data to files.

8) Functions: Function declaration, input and return parameters. Global and local variables.

9) Strings: Text manipulation.

10) Object Oriented: Paradigm of object-oriented programming, declaration of classes, attributes, methods, inheritance, etc.

11) Graphical interface: Construction of a GUI (graphical user interface) with the Tkinter library.

12) APIs and Web Scraping: Requesting web pages via an HTTP library and web scraping (extracting information from a web page) via the BeautifulSoup library.
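Several of the topics above (data structures, JSON, files and functions) come together in a small persistence example. A minimal sketch; the record fields and file name are hypothetical, and a temporary directory is used so the example cleans up after itself.

```python
import json
import os
import tempfile

def save_records(path, records):
    """Persist a list of dictionaries to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)

def load_records(path):
    """Read the list of dictionaries back from the JSON file."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)

students = [{"name": "Ana", "grade": 9.5}, {"name": "João", "grade": 7.0}]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "students.json")
    save_records(path, students)
    loaded = load_records(path)
```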

Prof. Rodrigo Schmitt - Let's Code Academy (24 hours)

Pandas: Work with Pandas to implement mathematical operations and structure data with pandas.Series and pandas.DataFrame.

Visualization with Matplotlib: Production of charts and dashboards for uncovering insights and storytelling with data.

Statistics: Basic notions of data collection (sample and population) and sample design. Variables, their distributions and how to compare them.

Time series: The basic elements of time series analysis, such as trend, seasonality, autocorrelation and sliding windows.

Portfolio Management: Learn how to calculate returns, volatility, Black-Scholes option prices, the Capital Asset Pricing Model and Value at Risk.

Regression and autoregression: Create linear regression models in one or more variables and autoregressive models for forecasting time series, such as AR, MA, ARMA and ARIMA.

ARCH and GARCH: Learn the ARCH and GARCH models for forecasting time series.

Classification: Apply the main supervised learning models, such as KNN, Naive Bayes, Decision Trees, Random Forest and Neural Networks to perform and test classifications.
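Of the portfolio topics above, Black-Scholes is the most formula-driven. A minimal sketch of the European call price, assuming no dividends and continuous compounding; the parameter values in the usage line are a common textbook example, not course data.

```python
from math import exp, log, sqrt
from statistics import NormalDist

def black_scholes_call(S, K, r, sigma, T):
    """European call price under Black-Scholes (no dividends, continuous compounding).

    S: spot price, K: strike, r: risk-free rate, sigma: volatility, T: years to expiry.
    """
    N = NormalDist().cdf
    d1 = (log(S / K) + (r + 0.5 * sigma ** 2) * T) / (sigma * sqrt(T))
    d2 = d1 - sigma * sqrt(T)
    return S * N(d1) - K * exp(-r * T) * N(d2)

price = black_scholes_call(S=100, K=100, r=0.05, sigma=0.2, T=1.0)
```

The matching put price follows from put-call parity: put = call - S + K * exp(-r * T).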

Prof. Rodrigo Schmitt - Let's Code Academy (48 hours)

1) Getting started with Pandas: Work with Pandas to implement mathematical operations and structure data with pandas.Series and pandas.DataFrame.

2) Data manipulation with Pandas: Data wrangling and feature engineering.

3) The Matplotlib module: Production of charts and dashboards for uncovering insights and storytelling with data.

4) Professional graphics with Seaborn and Plotly: Learn how to create more elegant graphics with Seaborn and interactive graphics with Plotly.

5) Fundamentals of statistical thinking: Basic notions of data collection (sample and population) and sample design. Variables and distribution of variables and their comparisons.

6) Descriptive and inferential statistics: Descriptive parameters on the distribution of a variable. Understanding the main probability distributions (Bernoulli, Binomial, Poisson, Uniform and Normal) and comparing samples using basic statistical tests (t-tests, one-way ANOVA).

7) Databases + Python: Structuring and creating a database with basic Python tools.

8) Analysis of databases by Pandas: Obtain and manipulate data from a database through the Pandas module.

9) Linear and multilinear regression: Correlations between variables and linear and multilinear predictive analysis.

10) Regression with time series and logistic regression: Autocorrelation, autoregressive models and the logistic model.

11) Supervised and unsupervised learning: The main classification and clustering models in machine learning with the scikit-learn library: KNN, KMeans, DBSCAN, MeanShift, etc.

12) Models of the decision tree family: Decision Trees, Random Forest, XGBoost, AdaBoost.

13) Models of the neural network family: Perceptrons, artificial neural networks of multiple layers of perceptrons and convolutional neural networks.

14) Natural language processing: Natural language processing (NLP) and its main concepts, procedures and algorithms.

Prof. Rodrigo Schmitt - Let's Code Academy (24 hours)

Intensive leveling course on logic and algorithms to prepare students for the 480-hour Data Science and Web Full Stack "mini-college" courses.

1) Propositional Logic and Boolean Operators. 2) Introduction to Algorithms and Programming Logic. 3) Sequential Structures. 4) Conditional Structures.

5) Repetition Structures. 6) Data Structures. 7) Functions. 8) Final project.

Prof. Rodrigo Schmitt - Let's Code Academy (20-hour MOOC: Massive Open Online Course)

1) Input and output: Reading user input and printing data.

2) Variables: Declaration and initialization of variables and data types: int, float, boolean, etc.

3) Conditional Structures: If, else and elif logical conditionals for building algorithms.

4) Loops: Repetition of blocks of code with for and while loops.

5) Data Structures: Manipulation of collections of data in the form of lists, tuples and dictionaries.

6) JSON: Representation of data as JavaScript Object Notation.

7) Files and Data Persistence: Reading and writing data to files.

8) Functions: Function declaration, input and return parameters. Global and local variables.

9) Strings: Text manipulation.

10) Object Oriented: Paradigm of object-oriented programming, declaration of classes, attributes, methods, inheritance, etc.

11) APIs: Requesting web pages via the requests library.

12) Getting started with Pandas: Work with Pandas to implement mathematical operations and structure data with pandas.Series and pandas.DataFrame.

13) Data manipulation with Pandas: Data wrangling and feature engineering.

14) The Matplotlib module: Production of charts and dashboards for uncovering insights and storytelling with data.

15) BLPAPI: Requesting financial data via Bloomberg's API.

16) Bloomberg Query Language: Python programming inside Bloomberg's BQuant software using the BQL module.

17) Bloomberg Query Plot: Production of charts and graphs using the BQPlot module.